Researchers at the Massachusetts Institute of Technology (MIT) and the MIT-IBM Watson AI Lab believe that moving deep learning into the analog realm will be key to both increasing the performance of artificial intelligence (AI) systems and dramatically improving their energy efficiency, and they have come up with the hardware required to do exactly that.

“The working mechanism of the device is electrochemical insertion of the smallest ion, the proton, into an insulating oxide to modulate its electronic conductivity,” explains senior author Bilge Yildiz, a professor at MIT, describing the processor the team has developed. “Because we are working with very thin devices, we could accelerate the motion of this ion by using a strong electric field, and push these ionic devices to the nanosecond operation regime.”

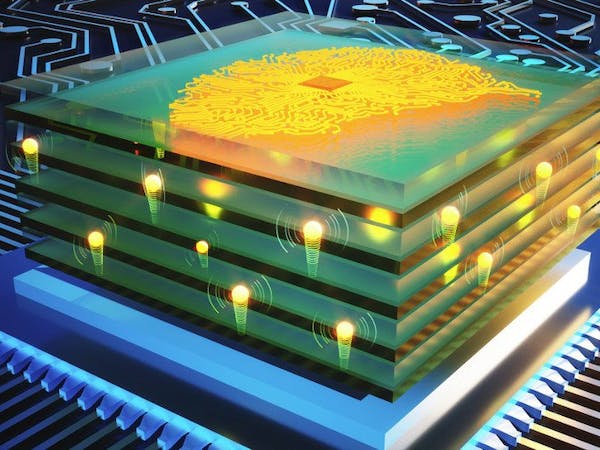

Tiny protonic programmable resistors could drive future AI systems faster and more efficiently than ever before. (📷: Onen et al)

The processor builds on earlier work to develop an analog synapse, but with a significant speed boost: the new version of the device, made up of arrays of protonic programmable resistors, operates a million times faster than previous versions. The resistors are also 1,000 times smaller than, and operate 10,000 times faster than, biological synapses like those found in the human brain.

Getting such technology out of the lab is always a challenge, but here the team claims to have made a breakthrough: the new device uses materials compatible with standard silicon fabrication techniques, allowing it to be produced in existing fabs and scaled down to nanometer process nodes. In theory, this means it could be integrated into commercial processors to accelerate deep-learning workloads.

“We have been able to put these pieces together and demonstrate that these devices are intrinsically very fast and operate with reasonable voltages,” says senior author Jesús A. del Alamo, also a professor at MIT. “This work has really put these devices at a point where they now look really promising for future applications.”

The technology has proven suitable for neural network workloads, including a network trained on the MNIST dataset. (📷: Onen et al)

“Once you have an analog processor,” claims lead author Murat Onen, “you will no longer be training networks everyone else is working on. You will be training networks with unprecedented complexities that no one else can afford to, and therefore vastly outperform them. In other words, this is not a faster car, this is a spacecraft.”

The team’s work has been published in the journal Science under closed-access terms.

Main article image courtesy of Ella Maru Studio and Murat Onen.